How To Automate Database Migration Testing/Dry-runs in Your CI/CD Pipelines

The most logical starting place is to stand up a new database cluster based on a snapshot, especially if you are using a managed database service like AWS RDS where doing so is easy. However, this still requires some manual steps or some sort of script to automate this via the CLI provided by your cloud provider, and what about your migration job itself? What about teardown? Is there a way to automate all of this? Incorporate this as a part of your CI/CD pipeline?

Is This Even Worth Automating?

I guess this depends upon the risk factor of the migrations you run typically, and whether your migrations tend to manipulate data vs. simply manipulate schema. I would suggest that if you just need to manipulate schema, you probably don't need to even conduct a dry-run sort of test at all, but having a way to simulate your more stressful migrations is always a very nice-to-have, because it sucks when a migration barfs and you have to consider this an outage while you repair tainted data (or at least assess whether your data is tainted). If you are really diligent about wrapping queries in a transaction that can be undone when something goes wrong, perhaps you don't need a solution like this either, but even then it can often be easier to just take the conservative approach by conducting a test like this rather than trusting that the way you've coded your migrations will really provide the safety net you need.

Having automation for your safety net is nice. If you don't have a solution for doing this, by the time you find or build one, test it and document it for next time, you might be able to just put together a more permanent solution you can trust, such as the approach that will be described here:

Consider the Hardware and Load Required by Your Migration(s)

I like using the exact same hardware to provide as accurate a simulation as possible, or at least hardware with the same memory allocation. This way, if you have written some sort of loop or data mapping process that reads data into memory, you can be confident that if it is destined to run out of memory in production, it will do so during your simulation. Of course, it is much better to rely on a small number of SQL queries wrapped in transactions rather than some sort of row-by-row loop, but if you can't get around that (or can't afford the time to level up your SQL skills), I suggest at least considering the hardware this will run on.

If you are extremely concerned about the load of these migrations, doing your testing with a completely new database cluster might be best; otherwise, simply creating a new database on the same cluster will work. I find that more often than not the latter is just fine, and that it is best to focus on optimizing your SQL and your approach to the migration rather than attempting to coddle your infrastructure. Your database is designed to take a beating, within reason, and if your migrations have memory consumption issues, a new database cluster (with the same hardware specs) is not going to help with this.

The Ingredients

Here are the ingredients you need:

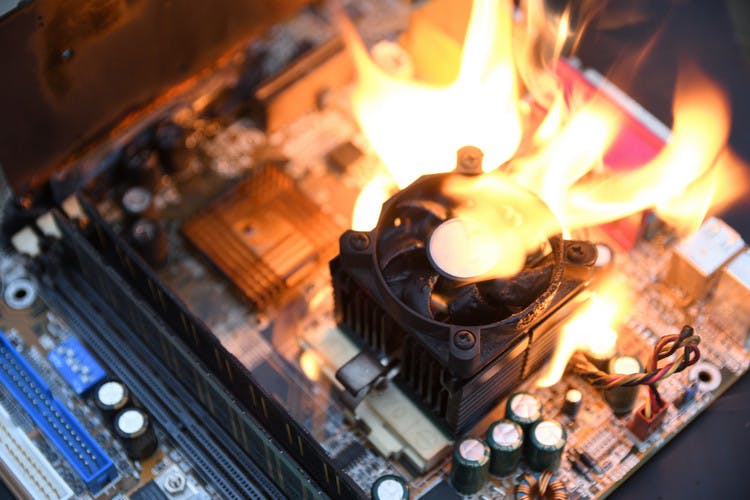

Something to create a copy of your production database, taking into account the question of whether this should be a brand new database cluster or simply a new database on your existing cluster. We will assume the latter for the remainder of this blog post, and will consider the use case to be more about making sure that the migrations run smoothly without causing data integrity issues, as opposed to making sure your underlying infrastructure doesn't explode.

Some sort of variable in your CI/CD pipeline that says "I want these migrations to run on my clone, and I do not want to trigger a code deploy".

Changes to your database migration job and CI/CD pipeline so that they respect this variable: the migration job uses it to override the database it normally runs against, and the pipeline skips deploying a new application release.

The Steps

My preferred way to clone the database to another database on the same infrastructure, for example in PostgreSQL, is piping pg_dump output to pg_restore:

pg_dump -h dbhost -U pguser -W -d [your source database] -Fc | pg_restore -h dbhost -U pguser -W -d [your target database]

If you've already run this command before, you might have to drop existing connections to the clone, then drop and recreate the database. Since you will likely need this command to be non-interactive, you'll need either a .pgpass file or environment variables (such as PGHOST, PGUSER, and PGPASSWORD) containing this info so you can remove the -h, -U, and -W arguments.
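As a rough sketch of what the non-interactive version could look like, the function below terminates connections to the clone, drops and recreates it, then runs the same dump-and-restore pipe. It assumes the connection details come from the standard libpq environment variables (or a .pgpass file) and that a "postgres" maintenance database exists to connect to. The function name and the database names in the example invocation are placeholders of mine, not anything prescribed.

```bash
#!/usr/bin/env bash
# Sketch only: re-clone a database onto the same cluster without prompts.
# Assumes PGHOST, PGUSER and PGPASSWORD (or a ~/.pgpass file) are already
# set, so -h, -U and -W can be dropped. Database names are placeholders.
set -euo pipefail

clone_database() {
  local source_db="${1:?source database name required}"
  local target_db="${2:?target database name required}"

  # DROP DATABASE fails while other sessions are connected to the target,
  # so terminate them first, then drop and recreate the empty clone.
  # These statements run while connected to the "postgres" maintenance database.
  psql -d postgres -v ON_ERROR_STOP=1 -v target="$target_db" <<'SQL'
SELECT pg_terminate_backend(pid)
  FROM pg_stat_activity
 WHERE datname = :'target' AND pid <> pg_backend_pid();
DROP DATABASE IF EXISTS :"target";
CREATE DATABASE :"target";
SQL

  # Same pipe as above, minus the connection flags.
  pg_dump -d "$source_db" -Fc | pg_restore -d "$target_db"
}

# Example invocation (uncomment to run against a real cluster):
# clone_database production production_clone
```

The SQL is fed to psql on standard input rather than with -c because psql only interpolates variables such as :'target' in scripts, not in -c commands. The pipefail option matters here too: without it, a failing pg_dump could be masked by a pg_restore that exits cleanly.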

How a variable is created for your CI/CD pipeline is obviously platform specific, so I'll leave that with you. We like to use Helm for our Kubernetes deployments, and with Helm we set up our database migrations as a pre-upgrade chart hook. If your setup is similar, you can create a new hook to run the above clone command, with a weight lower than your normal database migration hook so that it runs before your migration.

Then, all you need to do is set up another variable containing the name of your database clone (e.g. production_clone), and feed this value to your migration job as an override to the normal database that would be used for your migration. This is definitely worth testing in your non-production environment first, and the beauty of this approach is that you'll already have this same database migration setup there to piggyback off of. Using variables passed into Helm you can easily handle these workflow modifications, including skipping the deployment when your boolean "do the dry run instead" variable is set.
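To make the variable handling a bit more concrete, here is a small sketch of how a pipeline step might resolve which database the migration job should target and whether a deploy should follow. DRY_RUN, CLONE_DB, and PROD_DB are hypothetical pipeline variable names, and the defaults are placeholders; substitute whatever your CI/CD platform and chart actually use.

```bash
#!/usr/bin/env bash
# Sketch only: DRY_RUN, CLONE_DB and PROD_DB are hypothetical pipeline
# variables, and the default database names are placeholders.
set -euo pipefail

# Print the database the migration job should run against.
migration_database() {
  if [ "${DRY_RUN:-false}" = "true" ]; then
    echo "${CLONE_DB:-production_clone}"
  else
    echo "${PROD_DB:-production}"
  fi
}

# Succeed only when this run should go on to deploy a new release.
should_deploy() {
  [ "${DRY_RUN:-false}" != "true" ]
}
```

The output of migration_database could then be handed to Helm with --set under whatever value name your chart reads, and should_deploy could gate the release step of the pipeline. How your chart reacts to the dry-run flag is up to your templates.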

Unfortunately, if you are using Helm there is no way to stream the logs of these hook jobs into your CI/CD pipeline output while they run, although there is an open issue proposing this feature for Helm. For now, you'll have to rely on the "no failure = success" Linux/Unix convention and retrieve your logs via kubectl to troubleshoot any issues.
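Pulling those logs after the fact can be wrapped in a tiny helper like the sketch below. The job name and namespace are placeholders for whatever your hook actually creates, and this assumes your hook delete policy leaves the Job around after it finishes.

```bash
#!/usr/bin/env bash
# Sketch only: the job name and namespace are placeholders for whatever
# your migration hook creates in your cluster.
set -euo pipefail

# Print the last lines of output from the pods belonging to a Job.
dump_job_logs() {
  local job="${1:?job name required}"
  local namespace="${2:-default}"
  kubectl logs "job/$job" --namespace "$namespace" --all-containers=true --tail=200
}

# Example invocation (uncomment to run against a real cluster):
# dump_job_logs db-migrate production
```

In a pipeline you might call this only when the helm command exits non-zero, so that a failed dry run leaves its migration output in the build log.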

If you are not using Kubernetes or Helm, the same flows should work for you; you'll just need to adapt them to your situation. For example, you could create a shell script that runs the above pg_dump and pg_restore command, or set this up in whatever coding framework you use (e.g. in Rails this could be a Rake task).

As far as teardown goes, you could drop your database clone, but since you've already allocated the storage resources for the clone, there aren't going to be any cost savings to tearing down now vs. just leaving this database clone dormant. We prefer to drop before re-cloning rather than dropping on teardown, just to front load any issues that might exist with this step (such as dropping existing connections).

Don't Let This Clone Tempt You

Because this clone doesn't provide any data privacy tooling, it is not a good idea to start using it for general-purpose troubleshooting and testing. For one, it's on the same infrastructure, so you'd have to be mindful of your experimentation causing load issues that impact production traffic, and you'd also have to sort out access, creating users that can reach this clone but not the production database. The big reason in my mind, though, is that without a data privacy solution you are exposing all of the sensitive and confidential information in the production database, including its PII, to whoever uses the clone. You may trust these users with your life, but data breaches and leaks are most often the result of human error, so it is far better not to risk a data leak, or copies spawning more copies throughout your organization that have to be accounted for, especially since there is no reason to accept this risk when an adequate data privacy solution is available.

The Complete Turnkey Solution

If you are looking for the easiest route that aligns with the above approach, check out Redactics. Their most recent release includes a workflow for handling the above database migration recipe; it just requires plugging into your current setup. It also provides additional workflows for data privacy, including one for creating a PII-free database clone that keeps itself current with delta updates going forward. It is very convenient to use the same software for multiple use cases, since once you have it installed in your infrastructure, using additional workflows is a very easy lift.